Accessibility is not an afterthought. For me, it's a baseline for quality software, especially in government and enterprise systems, where equitable access isn't optional: it's a legal and ethical requirement.

In this post, I’ll share how I incorporate accessibility into development, the tools I use, and real-world examples from working with government Angular applications and a Cúram-inspired CRM platform.

1. Accessibility is Built Into My Workflow

From the first commit to the last PR, I apply accessibility-first principles. My goal is to design and build interfaces that work for everyone—from keyboard-only users to screen reader users.

Here’s what that looks like:

- Using semantic HTML (`<button>`, `<label>`, `<fieldset>`) instead of generic `<div>`s and `<span>`s

- Defining correct ARIA roles, landmarks, and focus management

- Structuring forms with proper `<label>` elements, `aria-describedby`, and error state patterns

- Ensuring color contrast meets WCAG 2.1 Level AA from the first prototype

- Testing all features with both keyboard navigation and screen readers
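The color contrast requirement is plain arithmetic, so it can be checked in code as well as in tools. Below is a minimal TypeScript sketch of the WCAG 2.x contrast-ratio formula: compute each color's relative luminance, take (lighter + 0.05) / (darker + 0.05), and compare it with the Level AA thresholds of 4.5:1 for normal text and 3:1 for large text. The helper names and the `#rrggbb` input format are hypothetical choices for illustration; axe DevTools and Lighthouse already run this check for you.

```typescript
// Sketch of the WCAG 2.x contrast-ratio calculation.
// Assumption: colors are 6-digit hex strings such as "#1a1a1a".

/** Convert one 8-bit sRGB channel to its linear value (WCAG relative-luminance definition). */
function linearize(channel8bit: number): number {
  const c = channel8bit / 255;
  return c <= 0.03928 ? c / 12.92 : Math.pow((c + 0.055) / 1.055, 2.4);
}

/** Relative luminance of a "#rrggbb" color: 0 for black, 1 for white. */
function relativeLuminance(hex: string): number {
  const match = /^#([0-9a-f]{2})([0-9a-f]{2})([0-9a-f]{2})$/i.exec(hex);
  if (!match) {
    throw new Error(`Expected a #rrggbb color, got "${hex}"`);
  }
  const [r, g, b] = match.slice(1).map((h) => linearize(parseInt(h, 16)));
  return 0.2126 * r + 0.7152 * g + 0.0722 * b;
}

/** Contrast ratio between two colors, from 1 (no contrast) up to 21 (black on white). */
function contrastRatio(foreground: string, background: string): number {
  const l1 = relativeLuminance(foreground);
  const l2 = relativeLuminance(background);
  const lighter = Math.max(l1, l2);
  const darker = Math.min(l1, l2);
  return (lighter + 0.05) / (darker + 0.05);
}

/** WCAG 2.1 Level AA: at least 4.5:1 for normal text, 3:1 for large text. */
function meetsAA(foreground: string, background: string, largeText = false): boolean {
  return contrastRatio(foreground, background) >= (largeText ? 3 : 4.5);
}
```

For example, `#767676` on white works out to roughly 4.54:1 and passes AA for normal text, while `#777777` lands just under 4.5:1 and only passes for large text.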

Accessibility isn’t something I “check at the end.” It’s part of how I write, refactor, and review code.

2. My Accessibility Testing Toolkit

I rely on a combination of automated tools and manual testing:

🔍 Automated Tools

- axe DevTools – Great for flagging missing labels, contrast issues, and structural errors

- Lighthouse (Chrome DevTools) – A fast way to run an automated accessibility audit (it covers a subset of axe-core checks, not full WCAG conformance)

- WAVE Evaluation Tool – Excellent for validating individual pages in static or server-rendered apps

- Playwright + axe-core – For headless accessibility testing in CI

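```typescript
import { test } from '@playwright/test';
import { injectAxe, checkA11y } from 'axe-playwright';

test('Homepage meets accessibility standards', async ({ page }) => {
  // Load the page under test (a local dev server on port 3000)
  await page.goto('http://localhost:3000');

  // Inject the axe-core engine into the page
  await injectAxe(page);

  // Run the axe scan; the test fails if any violations are reported
  await checkA11y(page);
});
```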

🧪 Manual Testing

- Keyboard-only testing – Using Tab, Shift+Tab, Enter, and Escape to verify navigation, modal behavior, and focus loops.

- Screen readers – Regular testing with NVDA (Windows) and VoiceOver (macOS) to ensure accurate announcements, skip links, and reading order.

- Zoom & reflow testing – Layouts are tested at up to 200% zoom to meet WCAG 1.4.4 (Resize Text) and checked for proper reflow (WCAG 1.4.10).

- Speech-based navigation – Workflows tested with Dragon voice commands to confirm compatibility for users relying on voice control.
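The focus loop verified during keyboard-only testing follows a simple rule: inside an open modal, Tab on the last focusable element returns to the first, and Shift+Tab on the first goes to the last. Here is a minimal sketch of that wrap-around logic, assuming the modal's focusable elements have already been collected into an ordered list. `nextFocusIndex` is a hypothetical helper that illustrates the expected behavior; it is not how Angular CDK's `cdkTrapFocus` is implemented internally.

```typescript
// Sketch of the wrap-around rule a modal focus trap enforces.
// Assumption: the dialog's focusable elements are indexed 0..count-1 in Tab order.

type TabDirection = "forward" | "backward"; // Tab vs. Shift+Tab

/** Hypothetical helper: index that should receive focus next, or -1 if nothing is focusable. */
function nextFocusIndex(current: number, count: number, direction: TabDirection): number {
  if (count === 0) {
    return -1; // nothing to cycle through; focus should stay on the dialog container
  }
  if (current < 0 || current >= count) {
    // Focus is not on a known element yet: Tab starts at the first, Shift+Tab at the last.
    return direction === "forward" ? 0 : count - 1;
  }
  const step = direction === "forward" ? 1 : -1;
  // Adding `count` before the modulo keeps the result non-negative, so focus wraps
  // last -> first and first -> last instead of escaping to the page behind the modal.
  return (current + step + count) % count;
}
```

During a manual pass, the same rule gives a quick expectation to check against: with three controls in a dialog, pressing Tab on the third should land on the first.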

3. Real-World Testing Example: Ontario Design System + Angular

While contributing to a government-facing Angular application built on the Ontario Design System, I encountered several accessibility issues:

- Modals lacked focus trapping; resolved using Angular CDK's `cdkTrapFocus`.

- Form error messages weren't announced by screen readers; added `role="alert"` and `aria-describedby`.

- Heading structure skipped levels; refactored to maintain a correct semantic hierarchy (`<h1>` → `<h2>` → `<h3>`).

These issues were caught during manual NVDA testing, reinforcing that automated tools are valuable but not sufficient on their own.
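Skipped heading levels are one of the easier issues to script a check for, as a complement to (not a replacement for) a screen reader pass. The sketch below assumes the heading levels have already been extracted in document order; `findSkippedHeadingLevels` is a hypothetical helper. It treats the start of the page as level 0, so a page whose first heading is not an `<h1>` is flagged as well.

```typescript
// Sketch: flag headings that jump more than one level deeper (e.g. <h2> followed by <h4>).
// Assumption: input is heading levels in document order, e.g. [1, 2, 3, 2] for h1, h2, h3, h2.

interface HeadingIssue {
  index: number; // position of the offending heading in the input
  from: number;  // level of the previous heading (0 = start of page)
  to: number;    // level that skipped ahead
}

function findSkippedHeadingLevels(levels: number[]): HeadingIssue[] {
  const issues: HeadingIssue[] = [];
  let previous = 0; // start of page, so the first heading is expected to be an h1
  levels.forEach((level, index) => {
    // Going deeper by more than one level is a skip; moving back up (h3 -> h2) is fine.
    if (level > previous + 1) {
      issues.push({ index, from: previous, to: level });
    }
    previous = level;
  });
  return issues;
}
```

In a browser, the input could come from `Array.from(document.querySelectorAll('h1, h2, h3, h4, h5, h6'), (h) => Number(h.tagName[1]))`; headings built with `role="heading"` and `aria-level` would need separate handling.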

4. Accessibility Is a Team Responsibility

Beyond testing and fixing issues myself, I actively promote accessibility practices across development teams.

In previous roles, I have:

- Created internal WCAG checklists for QA and developers

- Participated in code reviews with a focus on a11y best practices

- Provided design feedback to ensure color contrast and layout decisions meet WCAG requirements

- Documented accessibility behavior in component libraries and Storybook

This approach ensures that accessibility becomes a shared responsibility, not just a developer’s burden.

5. Continuous Learning

Accessibility standards and expectations evolve constantly. I keep up by exploring:

- WCAG 2.2 – Especially the new success criteria around focus visibility (such as 2.4.11 Focus Not Obscured) and input patterns (such as 2.5.8 Target Size)

- WAI-ARIA – For building and testing complex widgets in SPAs

- Overlay limitations – Understanding why true accessibility must be built into the codebase, not patched afterward
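Many of the complex widgets covered by the WAI-ARIA Authoring Practices rely on a roving tabindex: the whole widget is a single Tab stop, and arrow keys move focus within it. Below is a minimal sketch for a `role="tablist"`, assuming horizontal tabs with wrap-around and Home/End support. `nextTabIndex` and `rovingTabindexes` are hypothetical helpers; a real component would also move DOM focus and update `aria-selected`.

```typescript
// Sketch of the roving-tabindex keyboard model for a horizontal role="tablist".
// Assumption: count > 0 tabs, indexed 0..count-1 in visual order.

/** Hypothetical helper: which tab should receive focus after a key press. */
function nextTabIndex(current: number, count: number, key: string): number {
  switch (key) {
    case "ArrowRight":
      return (current + 1) % count;         // wrap from last tab to first
    case "ArrowLeft":
      return (current - 1 + count) % count; // wrap from first tab to last
    case "Home":
      return 0;
    case "End":
      return count - 1;
    default:
      return current;                       // other keys do not move focus
  }
}

/** tabindex value for each tab: only the active tab stays in the page's Tab sequence. */
function rovingTabindexes(active: number, count: number): number[] {
  return Array.from({ length: count }, (_, i) => (i === active ? 0 : -1));
}
```

Keeping a single `tabindex="0"` inside the widget is what lets keyboard users Tab past a long set of tabs in one keystroke instead of stepping through each one.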

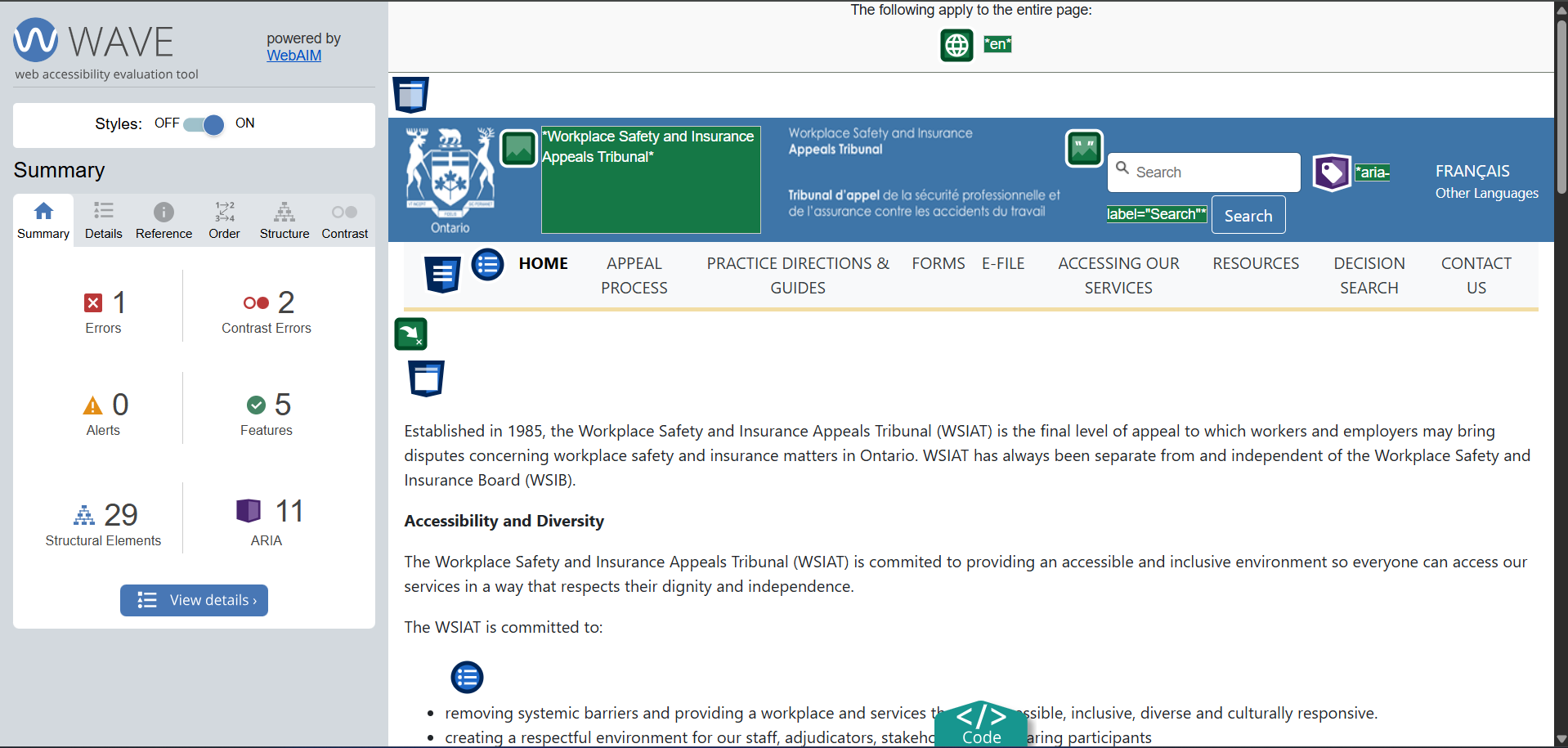

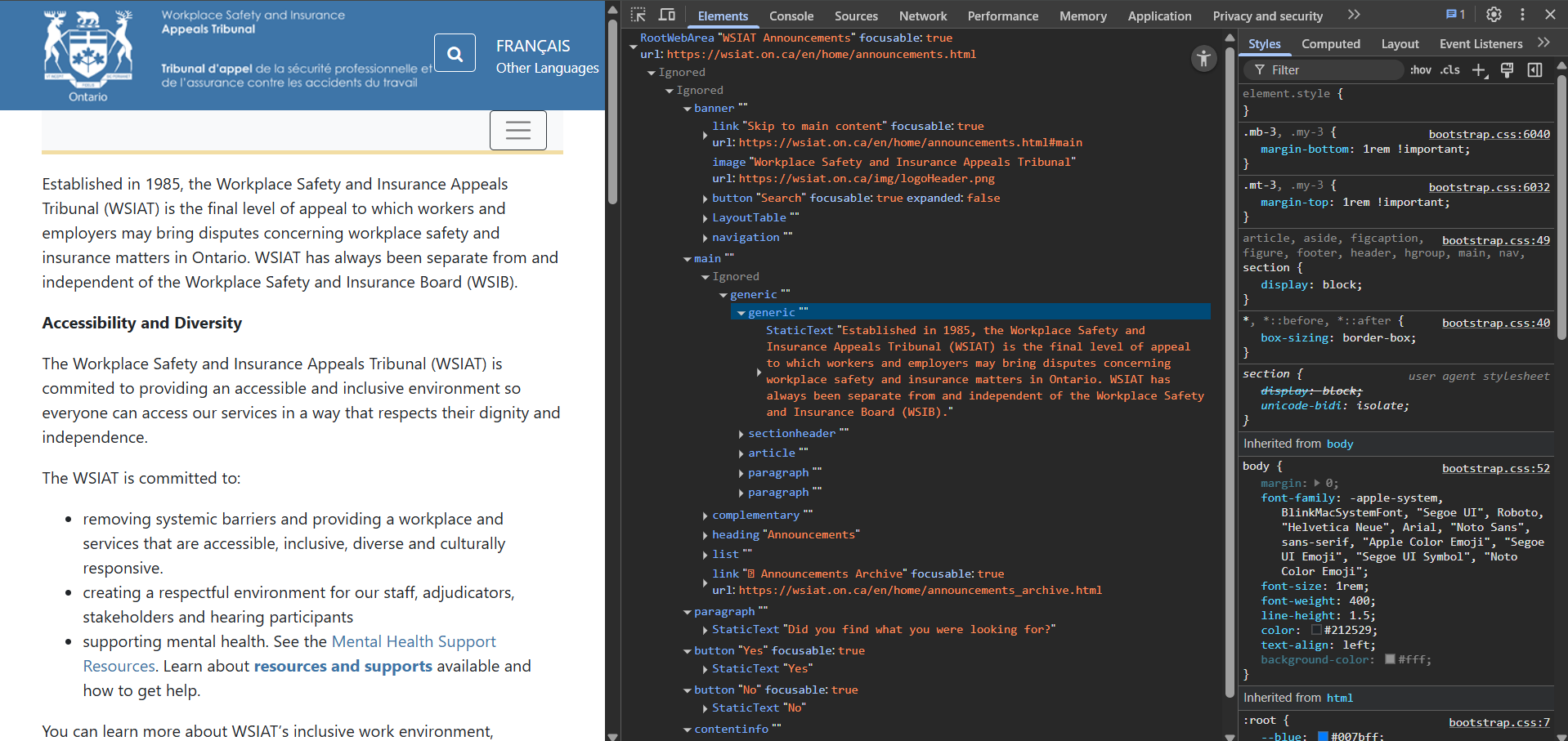

6. Field Study: WSIAT Web Accessibility Review

To stay current and prepare for accessibility-focused roles, I conducted a review of the WSIAT website. Here’s what I did:

- Ran a WAVE audit to find missing `alt` text, landmark misuse, and contrast issues

- Inspected the source code to validate heading structure, ARIA roles, and keyboard support

- Navigated the site using Dragon voice commands to test for speech-based accessibility

- Used Chrome DevTools Accessibility Panel to analyze the accessibility tree and tab order
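The tab order inspected in DevTools follows a rule from the HTML specification: elements with a positive `tabindex` come first, in ascending order with ties broken by DOM order, followed by elements with `tabindex="0"` (including native controls such as buttons) in DOM order, while negative values are skipped by Tab. Below is a minimal sketch of that rule, assuming each element's effective tabindex is already known. `sequentialTabOrder` is a hypothetical helper and deliberately ignores hidden, disabled, and inert elements.

```typescript
// Sketch of sequential (Tab key) focus order derived from tabindex values.
// Simplification: hidden, disabled, and inert elements are not handled.

interface FocusCandidate {
  name: string;     // a label for reporting, e.g. "Search button"
  tabIndex: number; // effective tabindex (native controls default to 0)
}

/** Hypothetical helper: names of elements in the order Tab visits them. */
function sequentialTabOrder(elementsInDomOrder: FocusCandidate[]): string[] {
  const withPosition = elementsInDomOrder.map((el, domIndex) => ({ ...el, domIndex }));
  // Positive tabindex values go first, ascending; equal values keep DOM order.
  const positive = withPosition
    .filter((el) => el.tabIndex > 0)
    .sort((a, b) => a.tabIndex - b.tabIndex || a.domIndex - b.domIndex);
  // tabindex 0 follows in DOM order; negative values are never reached by Tab.
  const zero = withPosition.filter((el) => el.tabIndex === 0);
  return [...positive, ...zero].map((el) => el.name);
}
```

This is also why positive `tabindex` values are generally discouraged: they pull elements ahead of everything else, so the Tab order no longer matches the visual reading order.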

Tool Screenshots

WAVE Accessibility Scan:

Chrome Accessibility Inspection:

💬 Final Thoughts

Accessibility testing is not a checkbox—it’s a commitment to inclusion, usability, and equity. Especially in the public sector, where services must reach all users, accessible software is foundational software.

Whether I’m building new systems or auditing legacy code, I bring an accessibility-first mindset to everything I do.

→ Let’s talk accessibility

📩 parbadid@mcmaster.ca